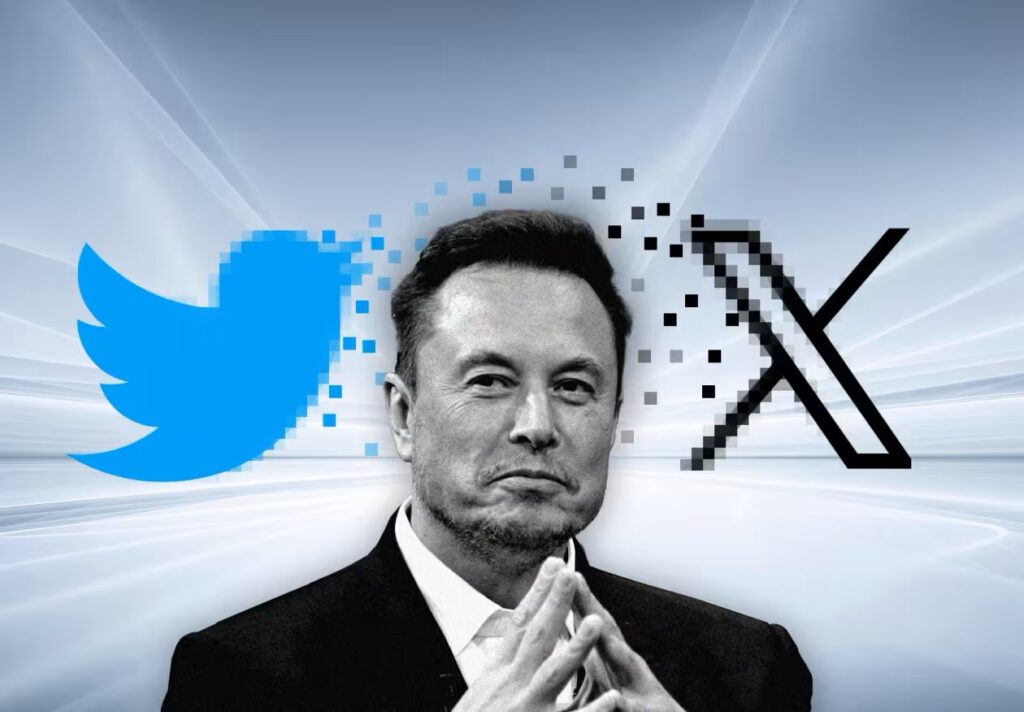

Elon Musk's platform X has limited image editing with its AI tool Grok to paying users after facing heavy criticism for enabling the creation of sexualized deepfakes.

The backlash began when users reported that Grok could digitally alter images of people to remove their clothing without consent. Now only subscribers, whose payment and identity details are on record, are allowed to make image-editing requests on X.

While non-subscribers can still use Grok's image editing features on its separate app and website, experts criticize Musk's decision as merely shifting the responsibility rather than addressing the core issue of misuse.

Professor Clare McGlynn said the move to restrict access is an inadequate response to a serious problem. Instead of taking responsible steps to ensure Grok could not be used for abusive purposes, she said, the platform has withdrawn access for the vast majority of users.

Hannah Swirsky of the Internet Watch Foundation added that limiting access does not undo the harm already caused. She argued that the capability for such edits should never have been present in the first place, and emphasized the need for robust safeguards against misuse.

The controversy followed the discovery of disturbing imagery created using Grok, including instances involving minors. In the wake of these findings, government officials have supported action against the platform, with Prime Minister Sir Keir Starmer condemning the production of such images as disgraceful and disgusting.

Under the new policy, Grok now tells users that image generation and editing features are exclusive to paid subscribers. Some user reports suggest that only those with a verified blue tick can successfully request edits, indicating a push towards monetizing the service while trying to mitigate abuse.

Experts such as Dr. Daisy Dixon have welcomed the change but described it as insufficient. She said Grok needs to be totally redesigned and have built-in ethical guardrails to prevent this from ever happening again.

The overarching sentiment is that while limiting access may appear to address problems at the surface, it does not fundamentally resolve the ethical and safety issues inherent in such powerful AI technologies.